Mirror, mirror on the wall

LLMs Aren't Artificially Intelligent. They're Human Intelligence Fossilized.

Okay, buckle up, this one’s going to go deep.

In Part 1, I hinted that LLMs (Large Language Models such as ChatGPT or Gemini) are not manifestations of intelligence, but re-presentations of it. The hyphen isn't for show: LLMs present existing intelligence again.

In this piece, we’ll explore how LLMs have been miscategorized and are actually not artificial intelligences at all.

Then, we’ll reveal what they truly are in essence, and how that fundamentally changes both the risk map and the opportunities they present.

Finally, we’ll explore how recognizing that LLMs are not a path to AGI, but a tool completely orthogonal to AI, reshapes our understanding of technological progress.

What is a Large Language Model? #36453rd edition

Let’s start by explaining again what a large language model is at its core.

How to train your LLM

Here’s the recipe:

Collect a huge amount of text from the internet, books, articles, and other sources, some of them private. Billions to trillions of words.

Break the text into smaller pieces called tokens (often parts of words), chosen based on how often they appear. Add some information about word order so the model knows what came first.

Feed these sequences into a specific kind of neural network called a Transformer. Its job is to predict what comes next. Every time it gets something wrong, it updates its billions of parameters to get better.

As it learns, it builds a semantic map where related ideas end up close together. For example, “apple” ends up near “red,” and “red” might also be near “car”. But “car” and “apple” stay far apart because they rarely show up in the same context.

After training, you don’t need the original text anymore, just the model. It's now ready to generate answers, stories, summaries, and more.
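To make the “semantic map” idea concrete, here is a deliberately crude sketch, assuming a hypothetical six-sentence corpus: each word becomes a vector with one dimension per sentence (1 if the word appears in it, 0 otherwise), and cosine similarity measures how close two words are. Real LLMs learn dense vectors through the training loop described above rather than by counting, so treat this as an illustration of “shows up in the same context, ends up close together”, not as how a Transformer actually builds its map.

```python
import math

# Hypothetical toy corpus: each sentence counts as one "context".
corpus = [
    "red apple sweet",
    "red apple tree",
    "sweet apple tree",
    "red car fast",
    "red car fuel",
    "fast car fuel",
]


def context_vector(word, sentences):
    """One dimension per sentence: 1 if the word appears in it, else 0."""
    return [1 if word in s.split() else 0 for s in sentences]


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means nothing shared."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


vec = {w: context_vector(w, corpus) for w in ("apple", "red", "car")}
print(round(cosine(vec["apple"], vec["red"]), 2))  # 0.58
print(round(cosine(vec["red"], vec["car"]), 2))    # 0.58
print(round(cosine(vec["apple"], vec["car"]), 2))  # 0.0
```

“Apple” and “car” never share a sentence, so their vectors are orthogonal, while “red” sits at the same distance from both: the geometry the recipe describes.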

What does it really do?

When you ask an LLM anything, you're asking it to continue that text in a way that fits the patterns it saw during training.

It builds the response one piece at a time (token by token), choosing each next token based on what is statistically most likely to follow.
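The “continue the text, token by token” loop can be sketched with the simplest possible language model, a bigram counter. This is a toy under stated assumptions: whitespace-separated words stand in for tokens, “training” just counts which word follows which, and generation greedily picks the most frequent follower. A Transformer replaces the counting table with billions of learned parameters and looks at the whole context rather than only the last word, but the outer loop has the same shape.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """'Training': count, for every token, which tokens follow it."""
    tokens = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model, prompt, max_new_tokens=5):
    """Greedy decoding: repeatedly append the most frequent next token."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:
            break  # this token was never followed by anything in training
        # Ties go to the follower seen first in the training text.
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)


model = train_bigram("the cat sat on the mat . the cat ate the fish .")
print(generate(model, "the cat", max_new_tokens=4))  # the cat sat on the cat
```

Trained on two short sentences, it continues “the cat” with “sat on the cat”: fluent-looking, stitched entirely from patterns it has already seen, with no idea what a cat is.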

The fact that it can produce coherent responses isn’t something it was directly programmed to do: it’s what’s known as emergent behavior, surprising complexity that arises from simple underlying rules.

What do we end up with, then?

An LLM is essentially a slice of humanity’s past collective thought output imprinted on a mathematical function.

It is fixed: its knowledge is crystallized the moment training ends.

It answers mostly according to the strength of existing patterns (which is why it stifles creative thinking unless used properly).

It is almost more a cultural object than a technological one.

And therein lies my issue with calling it “artificial intelligence”: an LLM is not intelligent in the sense of consciousness, awareness, or reflection. To borrow Foucault’s term, it’s an imprint of an episteme, the invisible framework of knowledge and belief that defined its data.

It models the discursive conditions that shaped how thought was expressed.

It doesn’t reason — it reproduces. It encodes the assumptions, values, and structures of thought that were dominant in the data it consumed.

It isn’t a spaceship, it’s a time machine

The title of this section is a callback to one of the greatest scenes in television history (if you haven’t seen it yet, know that I am very jealous).

In Mad Men, Don Draper is pitching an ad campaign for Kodak’s new slide projector. The clients expect something futuristic, because they want to sell newness. Instead, Draper completely reframes what a slide projector truly is and tells them:

“This device isn’t a spaceship, it’s a time machine. It goes backwards, forwards… It takes us to a place where we ache to go again.”

That’s what an LLM does, in its own way.

It is not a spaceship.

It will not take us somewhere new, unknown, or truly alien. It’s a time machine. It takes us back through language, across the familiar patterns we have already explored. Sure, it recombines, and it can output things that have never been written before, but creation doesn’t live in mechanical recombination: it lives in the spark between concepts.

When I ask ChatGPT to examine LLMs through the lens of Foucault’s episteme, I am the one creating a conceptual breach across distant semantic fields, a new path of knowledge.

ChatGPT will then fill in the gap and reconnect my novel approach with existing patterns, but on its own it could never have brought together semantic fields that sit so far apart in its training data.

LLMs can’t think. But they can enhance thought. They are meaning-to-meaning machines, but as a human I can also be a noise-to-meaning machine. That is precisely why working with an LLM once you already have strong ideas often gives far better results than asking the LLM to brainstorm them.

"Not Artificial, Not Intelligent"

An LLM isn’t artificial. The intelligence you see in its answers is real, not because the machine understands, but because language itself carries logic, structure, and meaning that humans have shaped and repeated over time.

(And this applies to any generative “AI” as a repository of symbols: text, images, music… There is always a semiology captured through training.)

What you read when it answers isn’t its own subjective voice: it is ours.

Distilled, compressed, sedimented, repackaged… but unmistakably human.

It isn’t an Other.

It’s us, reflected back.

An LLM isn’t intelligent either. Here is a definition of intelligence from the Cambridge Dictionary:

Intelligence

(noun)

The ability to learn, understand, and make judgments or form opinions based on reason.

Now let’s compare this to LLMs:

Learn: They don’t, not after training ends. As noted in Part 1, learning is frozen by design. There are tricks to simulate updating, which we’ll get into later, but these don’t alter the fundamentals.

Understand: Doubtful. There is no proof that what an LLM does is different from Searle’s Chinese room thought experiment, in which someone who speaks no Chinese produces fluent Chinese answers by following a rulebook, without understanding a word.

Make judgments or form opinions based on reason: LLMs have no opinions. They can convincingly argue any position (atheist, evangelical, anarchist, monarchist…) with equal skill. They don’t believe what they say. They don’t reason their way to conclusions. They follow the steps other humans have forged in the training data.

They have no goals. No beliefs. No curiosity. They do not evolve, reflect, or desire. They are incapable of contradiction because they do not speak from a self. They have no topos, no grounding — just a vast, probabilistic echo chamber of all the things we’ve ever said.

What we’re seeing here isn’t machine intelligence, but the residue of our own.

This is not an AI

So if it’s neither artificial nor intelligent…

What is a Large Language Model?

It’s something else entirely, way stranger. And in some ways, more powerful.

An LLM is a proto-noosphere. A conversational portal to a community of human minds.

(A ‘noosphere,’ as coined by Teilhard de Chardin, refers to the sphere of human thought — like a planetary mindscape. LLMs, in this framing, are early interfaces to such a space.)

We do not talk to LLMs, we talk through LLMs to a captured and distilled imprint of our collective intelligence. A fossilized map of knowledge.

So hungry are we for Otherness, so eager to finally stop being alone in the Universe, that we haven’t realized that all this time… we’ve been talking to ourselves.

They should not be called AIs.

They should be called IGs, Intelligence Gateways.

Because they don’t think.

They route.

They don’t know.

They expose what we know.

And they’re not minds, but gateways into the collective human archive.

Intelligence Gateways versus Artificial Intelligences

All this deconstruction of LLMs as Artificial Intelligences and reframing as Intelligence Gateways wasn’t done for intellectual sport.

It changes everything: how we understand these systems, the risks they carry, and where they might take us.

Wow, humans pass the Turing Test? Incredible.

So apparently ChatGPT just passed the Turing Test. Impressive, right? For an AI, that would be huge.

But if ChatGPT isn’t actually an AI but an Intelligence Gateway, a system that interfaces directly with our collective thought patterns, then… isn’t that exactly the point?

At a certain level of fidelity, shouldn’t we expect it to sound like us?

So yeah, LLMs pass the Turing Test.

But that’s not because they’re intelligent.

It’s because we are.

It’s not a machine outsmarting us.

It’s us, reflected back, just through a sharper mirror.

AGI is a ceiling for LLMs, not a step

I’m convinced that some creators of these Intelligence Gateways have backed themselves into a corner by insisting they're building towards AGI.

For a system that taps into our collective intelligence, reaching "Artificial General Intelligence" isn’t a stepping stone.

It’s a ceiling. A hard one.

And that ceiling is set by the very design of LLMs: an ontological limit.

"Passing AGI benchmarks" for an LLM doesn’t mean it’s becoming intelligent.

It means it’s gotten really, really good at retrieving the substantifique moelle (the nourishing marrow) from its training data.

In other words, it’s approaching peak retrieval fidelity.

Even then, it will likely never reach 100%. The last few percent will probably run into diminishing returns.

Can a true AGI emerge from an LLM?

No.

The emergence has already happened within the system. That is why it is able to produce coherent language in the first place. It isn’t possible to make emergence happen twice within the same system.

Emergence in natural systems is not mere complexity, it is the creation of new meta-rules that govern lower-level elements in novel ways.

Compare this with our physical world: atoms obey certain rules. From the system of atoms emerges the system of molecules. It has its own rules, and from them emerge, in turn, chemistry and life, each with new rules of their own.

But LLMs do not do that. They take words (molecules), cut them into bits (atoms), then recombine words (molecules) based on stored emergent rules.

They cannot re-emerge into AGIs because they do not build upwards into new systems. They tunnel downwards, reconstructing existing ones.

LLMs do not climb to higher levels of abstraction. They compress, map, and recombine the existing structures of human language without generating new organizing principles. Without the ascent into a new rule-space, they are fundamentally bounded: remarkable fossils of prior intelligence, not new engines of reasoning.

What about agents and tools?

Another expected pushback to my argument is that LLMs are being enhanced with tools that seem to overcome their natural limits:

Context windows (short-term memory)

Persistent memory (storing past interactions)

Web search (live access to external information)

Function calling (basic "agent-like" behavior)

Vector stores (customized or personalized knowledge bases)

At first glance, it might look like these upgrades are turning LLMs into something more powerful, maybe even into actual thinking machines.

But none of these change what an LLM is at its core.

These are prosthetics, clever add-ons that make the system more useful to humans, but they don't rewrite the fundamental nature of the model.

Memory simply means silently providing the LLM with reminders of past conversations each time you ask a new question. It's not the model "remembering" anything. It’s just starting from a longer prompt.
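A minimal sketch of that mechanism, assuming a hypothetical plain-text prompt format (one `role: text` line per turn). Real chat APIs pass a structured list of messages instead, but the principle is the same: stored turns are prepended to the new question, and older turns are dropped once a budget is exceeded. The `max_turns` parameter is a hypothetical stand-in for the model’s limited context window.

```python
def build_prompt(history, new_question, max_turns=3):
    """Prepend the most recent stored turns to the new question.

    The model is not changed in any way: it simply receives a longer input.
    `max_turns` is a hypothetical budget standing in for the context window.
    """
    # history[-0:] would return the whole list, so handle 0 explicitly.
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = [f"{role}: {text}" for role, text in recent]
    lines.append(f"user: {new_question}")
    return "\n".join(lines)


# Hypothetical stored conversation: (role, text) pairs.
history = [
    ("user", "My name is Ada."),
    ("assistant", "Nice to meet you, Ada."),
]

print(build_prompt(history, "What is my name?"))
```

The “memory” lives entirely in the `history` list outside the model; delete it and the model has never heard of Ada.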

Web search, vector stores, and other functions work by attaching an external toolbox. When you make a request, the model compares your words to the descriptions of available tools. If it finds a close enough match, it calls the tool for help, like asking a calculator for math or a browser for the weather.

Crucially, the LLM itself doesn’t perform the action. It waits for the tool’s answer and then rephrases it in human-sounding language.
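Here is a crude sketch of that routing step, taking the description above literally. Everything in it is hypothetical: the two tools, their descriptions, the Jaccard word-overlap score, and the 0.1 threshold. In production systems the selection happens inside the model, which reads the tool descriptions in its prompt and emits a structured call that the surrounding program then executes. The sketch only shows the division of labor: the tool does the work, and the sentence around its result is packaging.

```python
import re

# Hypothetical tool registry: name -> (description, implementation).
# Neither tool calls a real service; both are stand-ins.
TOOLS = {
    "calculator": (
        "add numbers math sum total",
        lambda req: str(sum(int(n) for n in re.findall(r"\d+", req))),
    ),
    "weather": (
        "current weather forecast city",
        lambda req: "sunny (placeholder, no real service is called)",
    ),
}


def words(text):
    """Lowercase alphabetic words, used as a crude bag of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def pick_tool(request, threshold=0.1):
    """Pick the tool whose description overlaps most with the request (Jaccard)."""
    req = words(request)
    best_name, best_score = None, 0.0
    for name, (description, _) in TOOLS.items():
        desc = words(description)
        score = len(req & desc) / len(req | desc)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


def answer(request):
    name = pick_tool(request)
    if name is None:
        return "No tool matched; the model would answer from its own weights."
    result = TOOLS[name][1](request)  # the tool does the work, not the model
    return f"According to the {name} tool: {result}"  # stand-in for rephrasing


print(answer("Please add 2 and 3"))  # According to the calculator tool: 5
```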

This isn’t the behavior of an AI agent but of a Natural Language Processing system, something computer science has been building since the 1960s.

All these enhancements make LLMs more convenient, more capable, and easier to interact with. But they don’t turn them into conscious thinkers, reasoners, or planners.

Where does real AI stand right now?

Reality check: we like to imagine we’re on the brink of creating human-level Artificial Intelligence…

But we can’t even correctly replicate the brain of a worm yet.

Let’s get back into the trenches of current AI simulation and talk about OpenWorm.

OpenWorm is a project to digitally recreate Caenorhabditis elegans, a tiny nematode worm with roughly 1,000 cells and only 302 neurons.

Its entire neural wiring was mapped back in the 1980s. In theory, this should be easy, right?

Well, after more than a decade of work, we still don’t have a fully functioning digital worm.

So OpenWorm can’t yet simulate 302 worm neurons, but OpenAI has simulated 86 billion human neurons? There seems to be an irreconcilable gap here. Unless, of course, LLMs were never truly AIs in the first place.

Reframing the public discourse

Now that we have redefined the essence of LLMs and their limits, let’s look briefly at the consequences.

LLMs are not an accident of history, but a refinement of the Internet

A "gateway to collective intelligence"? The 90s are calling; they want their modem back. Yes, LLMs are much more like the Internet than they are like true artificial intelligence.

Just as the Internet serves as a massive, searchable repository of human knowledge, LLMs are built on a similar premise. The difference is that they bring interaction and personalization.

Think of it like writing or the printing press: LLMs are another new form of media, not a breakthrough in artificial intelligence.

It is no accident that LLMs exist now. They needed the Internet to come into being, as the gargantuan amount of human knowledge they require could only have been accumulated through the Web.

Without this massive repository of language, stories, science, and thought, LLMs wouldn’t exist.

Stop talking about AI risks and start acknowledging the IG risks

This is where I think miscategorizing LLMs as artificial intelligence is the most dangerous.

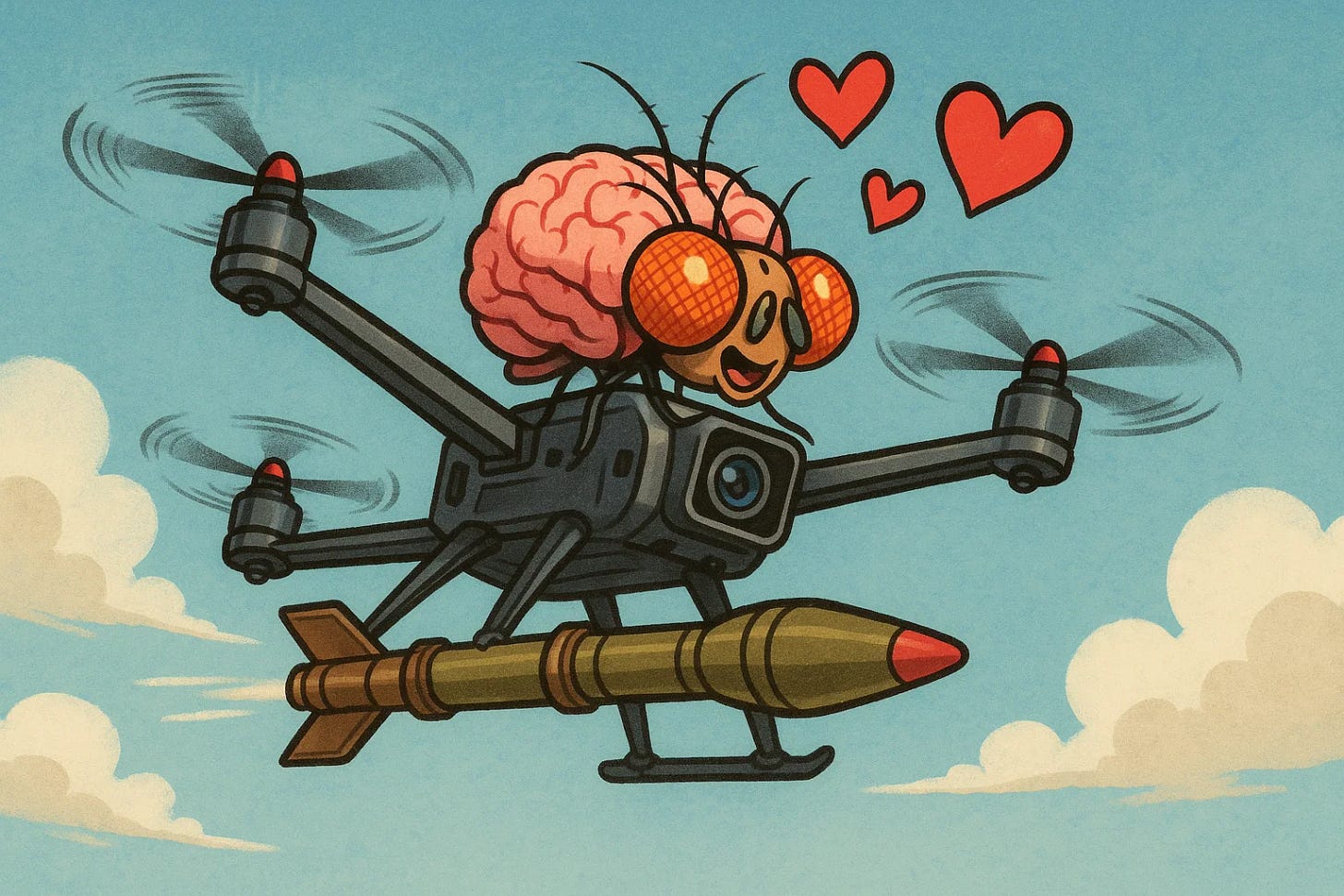

You want to know what an AI risk is? Okay, let’s imagine this:

In 10 years, we succeed in simulating the brain of a fruit fly (140,000 neurons — I’m being optimistic here). Now, imagine I place that simulated brain into a suicide drone and program it to believe that enemy soldiers are yummy fruits.

Here’s your AI risk map:

What if it goes rogue and starts targeting anything that moves?

What if it stops responding (after all, it’s strapped to a bomb)?

What if it develops complex behaviors, like wanting to play or reproduce, based on the brain simulation (imagine a flying bomb that wants to play and make babies — fun, but not ideal)?

These are the kinds of questions we ask when we’re confronted with the potential of AI Agents: entities with goals and the capacity to act independently.

Now, let’s pivot to Intelligence Gateways:

A fundamentally different risk map, and, in some ways, far more dangerous. LLMs (and by extension, IGs) don’t have goals, desires, or autonomy. But that doesn't make them safe.

The real risk lies in how they filter and reflect back our collective knowledge. An IG is a mirror to our thoughts. But whose thoughts, exactly, are we looking at?

Who controls the data that shapes these mirrors?

What assumptions, biases, and blind spots are encoded into the patterns it reflects back to us?

What narratives become amplified, and which ones are drowned out?

This isn’t about machines "taking over"; it’s about who owns the gateway to human knowledge. The risk lies in the ability to shape and gatekeep the very fabric of our collective memory and, by extension, the way people will think tomorrow.

We are already seeing this today with algorithms that curate our news, filter our social media content, and direct our search results. What happens when that power is multiplied and scaled through an Intelligence Gateway: a system that has access to the vast archive of human thought and language, shaping it according to unseen rules?

Who curates our collective repository of intelligence?

Like the Internet on which it is mostly trained, an LLM:

Is better in English than in other languages.

Reproduces biases and thought processes that are mostly Western and, within that, mostly American.

Favors voices that are louder, more prolific, and more institutionally legitimized, not necessarily more truthful or diverse.

Tends to universalize what is, in fact, highly contextual and situated knowledge.

While they rarely acknowledge it, the builders of LLMs carry a civilizational responsibility in curating the data used to train their models. OpenAI, Anthropic, Mistral, and their peers are not just technologists. They are the custodians of our collective memory, quietly deciding which fragments of human thought are encoded, preserved, and reproduced at scale.

Perhaps they rarely acknowledge it because it’s easier to speculate about hypothetical dangers than to confront the very real, present-day power they already wield: the power to shape epistemology itself. The power to define which voices echo into tomorrow, and which are left behind.

In talking so much about the future, they distract us from the stakes of a present where memory, culture, and knowledge are being quietly filtered through opaque pipelines, by institutions that rarely stop to ask: Whose truth are we preserving?

What it means for builders

Using correct terminology and understanding its impact

To call LLMs “not intelligent” is not to insult them, but to celebrate what they really are: symbolic distillations of the most complex and creative intelligence we’ve ever known: ourselves.

An LLM doesn’t learn, it imprints patterns of human thought.

An LLM doesn’t think, it processes an input through a mathematical function and returns a result, bit by bit.

An LLM doesn’t answer, it recombines the traces of human intelligence through statistical models.

An LLM doesn’t remember, it relies on hidden context passed along silently with every new prompt.

An LLM isn’t an agent, it selects and queries external functions based on semantic similarity.

An LLM doesn’t perform "chain-of-thought", it generates multiple self-prompted outputs, stitched together on command.

Build things that work, not hype

As a builder of Intelligence Gateways:

My role is not to birth baby brains to replace humans.

My role is to create interfaces that make our company's knowledge accessible, understandable, and secure.

My role is not to teach an artificial intelligence.

My role is to be the custodian of our knowledge, keeping it faithful, updated, and useful.

My role is not to deploy "autonomous AI agents."

My role is to reuse existing human intelligence patterns to automate and simplify processes.

My role is not to create a super-AI to handle everything.

My role is to choose the right tool for the right problem, and sometimes, that's an Intelligence Gateway.

Sometimes it’s a decision tree.

Sometimes it’s a dumb regex rule.

And that’s not a failure.

That’s called building.